Among the display interfaces currently on the market, VGA and DVI have gradually faded into history. Type-C is still a niche option, while DP (DisplayPort) and HDMI have become standard features of mainstream products. Mainstream graphics cards also use these two output interfaces. This raises a new question: when both interfaces can be used, which one is better? Let's discuss DP vs HDMI.

For most ordinary consumers, it is enough that the monitor connects to the host and works; which interface is used does not matter. For DIY players, however, this issue is very important. It has nothing to do with the raw performance of the graphics card and monitor, and compared with headline specifications it is easily overlooked.

This is especially true for high-end monitors equipped with features such as FreeSync, HDR, high resolution (above 4K) and high refresh rate (above 144Hz), where the two interfaces usually behave differently when connected to the same device. If you do not choose the best connection method, various compatibility issues may arise that prevent the graphics card and monitor from performing at their best.

To make a choice that suits us, we must first take a fresh look at the two interfaces, DP and HDMI.

The development history of DP and HDMI

From the perspective of development history, HDMI is the predecessor. Its first standard came out as early as 2002, and its latest protocol version is HDMI2.1. The DP interface is a latecomer: its first standard appeared in 2006, and its latest protocol version is DP2.0. These two interfaces are a sign that video transmission has fully entered the digital era. Although the earlier DVI interface also used digital signals for transmission, it could not carry audio and data streams.

Type-C interfaces that follow the Thunderbolt and USB3.* protocols transmit video through the DP protocol channel, so strictly speaking they are a special form of the DP interface. Thunderbolt 2 supports DisplayPort 1.2, while Thunderbolt 3 and full-featured Type-C ports that follow the USB3.1 specification can support newer DP versions (up to DP 1.4, depending on the controller). However, the two differ in bandwidth (Thunderbolt 3 is 40 Gbps, USB3.1 is 10 Gbps), so their video transmission capabilities differ as well. We will talk about this later.

After years of development, the physical form of the DP and HDMI interfaces has hardly changed, but the transmission capabilities of different protocol versions differ enormously. Therefore, when we consider which of the two is more suitable, we must also take the specific versions into account.

Both DP and HDMI standards are backward compatible. The HDMI1.0 cable you bought ten years ago can still be used with the latest RTX30 series graphics cards. However, because of the barrel effect across the monitor, cable, and graphics card, the maximum display capability is limited by whichever of the three is weakest.

Such a connection may mean that a 4K gaming monitor that supports 144 Hz and HDR paired with an RTX3080 will ultimately only be able to run in 4K and 24Hz modes, completely unable to unleash the true capabilities of the two pieces of hardware. This example may be extreme, but it directly reflects the key to choosing HDMI or DP: whether the performance of the hardware can be fully utilized.

The picture below shows the relevant information about each past version of the DP and HDMI interfaces. To avoid the embarrassing situation mentioned above, you can quickly find the supported display modes in this table.

The most important parameters of each generation of these two interfaces are the transmission rate and the data rate. In the early DP and HDMI specifications, digital signals were mostly transmitted using 8b/10b encoding. In 8b/10b encoding, every 8 bits of data require 10 bits of transmission bandwidth on the wire. The extra redundancy is used to ensure signal integrity, which means only 80% of the theoretical bandwidth can be used to transfer data.

Under the latest protocols, DP 2.0 uses 128b/132b encoding, which raises the coding efficiency to 97%, while HDMI 2.1 uses 16b/18b encoding, with a coding efficiency of 88.9%. HDMI is also equipped with an auxiliary channel for transmitting other data, but its impact on the data rate is small. In addition, the DP interface of the same generation generally has a higher transmission rate, so the latest generation of DP has a higher data rate than HDMI. To understand this, we need to better understand the meaning of transmission bandwidth.
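To make the encoding overhead concrete, here is a minimal Python sketch that turns a raw link rate into a usable data rate for the three encodings named above. The function names are made up for illustration, and the 18 Gbps raw rate used for HDMI 2.0 in the usage note is an assumption that is not stated in this article.

```python
from fractions import Fraction

# Payload bits divided by transmitted bits for each encoding named in the text.
ENCODINGS = {
    "8b/10b": Fraction(8, 10),
    "16b/18b": Fraction(16, 18),
    "128b/132b": Fraction(128, 132),
}

def efficiency_percent(encoding):
    """Coding efficiency as a percentage, rounded to one decimal place."""
    return round(float(ENCODINGS[encoding]) * 100, 1)

def effective_data_rate(raw_gbps, encoding):
    """Usable data rate in Gbps for a given raw link rate in Gbps."""
    return float(raw_gbps * ENCODINGS[encoding])

if __name__ == "__main__":
    for name in ENCODINGS:
        print(name, efficiency_percent(name), "%")
    # Assumed raw rate of 18 Gbps for HDMI 2.0 (hypothetical input, see lead-in).
    print(effective_data_rate(18, "8b/10b"), "Gbps usable")
```

With an 18 Gbps raw link and 8b/10b encoding, the usable rate comes out at 14.4 Gbps, which matches the HDMI2.0 figure quoted later in this article.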

The meaning of data transmission bandwidth

All video data transmitted digitally (including over DP, HDMI and DVI interfaces) requires a certain data bandwidth. Each pixel on the display has three data points: red, green and blue (RGB). Alternatively, the three data points of brightness, blue chroma difference and red chroma difference (YCbCr/YPbPr) can be used for transmission.

At the output end, no matter what operations are performed inside the graphics card, the final generated data is converted into a signal for display. Internally, this data is generally held in a 16-bit RGBA format (where A is alpha, the transparency information).

The most common standard currently uses 24-bit color. In this mode, the red, green and blue components of each pixel occupy 8 bits each. This is also where the bit depth figure in computer display settings comes from. In HDR and high color depth displays, each color component is increased to 10 bits in order to show richer colors, which means each pixel uses 30-bit color and can deliver a better display effect.

In some top-level professional monitors, the bit depth has even been increased to 12-bit and 16-bit, but this is still very niche at present. Most of the products that ordinary consumers have access to are 8-bit, and a small number of high color accuracy and HDR monitors are 10-bit. In this case, the display signal uses 24 or 30 bits of data per pixel. By multiplying this number by the number of pixels and the screen refresh rate, we can quickly calculate the minimum bandwidth required for the transmission.

Taking the above monitor as an example, consider the bandwidth required for a display mode of 3440 * 1440 @ 100 Hz. The required bandwidth (amount of pixel data per second) in 8bit mode is 24 * 3440 * 1440 * 100 Hz = 11,888,640,000 bps ≈ 11.9 Gbps. In 10bit mode it is 30 * 3440 * 1440 * 100 Hz = 14,860,800,000 bps ≈ 14.9 Gbps. These are minimum figures for the pixel data alone, before any timing overhead is added.
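The multiplication described above can be sketched in a few lines of Python. This is only an illustration: the function name is hypothetical, and it counts active pixel data only, so it gives the minimum figure and leaves out blanking and other timing overhead that real links also carry.

```python
def raw_video_bandwidth_bps(width, height, refresh_hz, bits_per_channel=8, channels=3):
    """Minimum pixel-data bandwidth in bits per second (no timing overhead)."""
    bits_per_pixel = bits_per_channel * channels  # 24 for 8-bit RGB, 30 for 10-bit
    return width * height * refresh_hz * bits_per_pixel

if __name__ == "__main__":
    for depth in (8, 10):
        bps = raw_video_bandwidth_bps(3440, 1440, 100, bits_per_channel=depth)
        print(f"3440x1440 @ 100 Hz, {depth}-bit: {bps:,} bps = {bps / 1e9:.2f} Gbps")
```

For 3440 * 1440 @ 100 Hz this gives 11,888,640,000 bps in 8bit mode and 14,860,800,000 bps in 10bit mode. Figures that include timing overhead will be higher than these minimums.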

When the cable or output interface cannot meet the demand, the only choice is to reduce the resolution, reduce the refresh rate, or turn off HDR. In my case, connecting a laptop through its HDMI2.0 interface (14.4Gbps) enables the 3440*1440@100 Hz mode without trouble, but connecting through the full-featured Type-C port (USB3.1 protocol) only allows the 3440*1440@60Hz display mode because of the interface bandwidth limit (10Gbps).

Moreover, in practice it is not enough to meet only this minimum theoretical bandwidth. A certain amount of headroom is needed, and more complex calculations are required to determine the real bandwidth requirement. To make bandwidth requirements easier to judge, the Video Electronics Standards Association (VESA) has published a set of intuitive reference standards.

Simply check this table with the interface specification bandwidth shown above to quickly confirm the applicable resolution. If the required data bandwidth is less than the maximum data rate supported by the standard, this resolution and frame rate can be used normally.
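The lookup described here amounts to a simple comparison, sketched below in Python. The 14.4 Gbps figure for HDMI 2.0 is quoted in this article; the other data rates in the dictionary are commonly cited values and should be treated as assumptions, since the referenced table is not reproduced here. The names are hypothetical.

```python
# Maximum data rates in Gbps after encoding overhead.
# HDMI 2.0 (14.4) comes from the article; the rest are assumed typical values.
MAX_DATA_RATE_GBPS = {
    "HDMI 2.0": 14.4,
    "DP 1.2": 17.28,
    "DP 1.4": 25.92,
    "HDMI 2.1": 42.67,
}

def interfaces_that_fit(required_gbps):
    """Return interfaces whose data rate covers the requirement, slowest first."""
    fitting = [name for name, rate in MAX_DATA_RATE_GBPS.items()
               if required_gbps <= rate]
    return sorted(fitting, key=MAX_DATA_RATE_GBPS.get)

if __name__ == "__main__":
    print(interfaces_that_fit(12.0))   # a mode every listed interface can carry
    print(interfaces_that_fit(20.0))   # only the faster interfaces remain
    print(interfaces_that_fit(49.65))  # nothing listed fits without compression
```

An empty result means the mode cannot be carried uncompressed by any interface in the dictionary, so the resolution, refresh rate or color depth has to come down, or compression has to be used.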

It should be noted that the standard shown in the above figure is based on uncompressed signals. In order to output higher-resolution images under limited hardware bandwidth, the HDMI and DP interfaces have also added support for Display Stream Compression (DSC), a technology that helps devices overcome the physical limits imposed by interface bandwidth. DP added support for DSC 1.2 as early as version 1.4, and HDMI added support for this technology in the latest version 2.1.

At 8K and 60Hz in the basic 8bit mode, a data bandwidth of 49.65 Gbps is required. When 10bit HDR is turned on, 62.06 Gbps is required. With 8K + 120 Hz + 10bit HDR, uncompressed transmission requires up to 127.75 Gbps, and currently there is no interface that can meet this demand.

By converting to 4:2:2 or 4:2:0 YCgCo and using delta PCM encoding, DSC can provide a compression ratio of up to 3:1 while claiming a "visually lossless" result. With DSC, the bandwidth required for 8K + 120Hz + HDR drops to only 42.58 Gbps, which makes this ultimate display mode achievable over the existing HDMI2.1 and DP2.0 interfaces.
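The effect of the 3:1 ratio on the figures quoted above can be checked with a short Python sketch. It simply divides the uncompressed requirement by the ratio; real DSC links negotiate a target bits-per-pixel rather than a fixed ratio, so this is an approximation, and the function name is hypothetical.

```python
def dsc_bandwidth_gbps(uncompressed_gbps, ratio=3.0):
    """Approximate bandwidth after DSC, assuming a fixed compression ratio."""
    return round(uncompressed_gbps / ratio, 2)

if __name__ == "__main__":
    modes = {
        "8K @ 60 Hz, 8bit": 49.65,
        "8K @ 60 Hz, 10bit HDR": 62.06,
        "8K @ 120 Hz, 10bit HDR": 127.75,
    }
    for name, gbps in modes.items():
        print(f"{name}: {gbps} Gbps -> {dsc_bandwidth_gbps(gbps)} Gbps with 3:1 DSC")
```

For the 8K + 120 Hz + 10bit HDR case this reproduces the 42.58 Gbps figure given in the text.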

In addition to transmitting video, HDMI and DP also need to reserve bandwidth for digital audio data. Under the current standards, audio uses up to 36.86 Mbps (0.037 Gbps) of bandwidth. Although this takes up part of the overall bandwidth, the overall impact is small.
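To see why the audio share is negligible, the following Python sketch computes an uncompressed PCM audio rate and compares it with a link's data rate. The channel count, sample rate and bit depth used here (8 channels, 192 kHz, 24 bits) are an assumption: they are one combination that reproduces the 36.86 Mbps figure, not a breakdown given in this article. The function names are hypothetical.

```python
def audio_bandwidth_mbps(channels, sample_rate_hz, bits_per_sample):
    """Uncompressed PCM audio bandwidth in Mbps."""
    return channels * sample_rate_hz * bits_per_sample / 1_000_000

def share_of_link_percent(audio_mbps, link_gbps):
    """Audio bandwidth as a percentage of the link's data rate."""
    return audio_mbps / (link_gbps * 1000) * 100

if __name__ == "__main__":
    # Assumed audio format: 8 channels, 192 kHz, 24-bit samples.
    audio = audio_bandwidth_mbps(8, 192_000, 24)
    print(f"audio: {audio:.3f} Mbps")
    # 14.4 Gbps is the HDMI2.0 data rate quoted in the article.
    print(f"share of a 14.4 Gbps link: {share_of_link_percent(audio, 14.4):.3f} %")
```

Even on the slower HDMI2.0 link, audio at this rate takes roughly a quarter of one percent of the available data rate.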

It is not difficult to see from the above that the simple bandwidth calculation of resolution * refresh rate * color depth is not comprehensive enough, because actual performance also depends on factors such as timing standards, encoding methods, and audio bandwidth. More bandwidth is still better, but it is clearly not the only measure of video signal transmission capability.

Advantages and Disadvantages of DP and HDMI Interfaces

In the current mainstream market, DP1.4 is the most powerful and most common version of the DisplayPort standard. Although the DP2.0 specification was released in June last year (2019), no consumer-grade graphics cards or monitors supporting this interface have been introduced yet.

The recently launched RTX30 series graphics cards are still equipped with the DP1.4 interface. Although its available bandwidth is lower than that of the HDMI 2.1 port on the same cards, support for up to 8K@60Hz already meets current needs.

However, 8K display equipment currently consists mainly of TVs, which basically use the HDMI 2.1 interface for 8K signal transmission. Based on the past performance of DP in the TV market, it is unlikely that products using DP2.0 will launch in the short term. In other words, until DP2.0 becomes widespread, the peak transmission performance of DP will not match HDMI2.1 for the time being.

VRR Technology

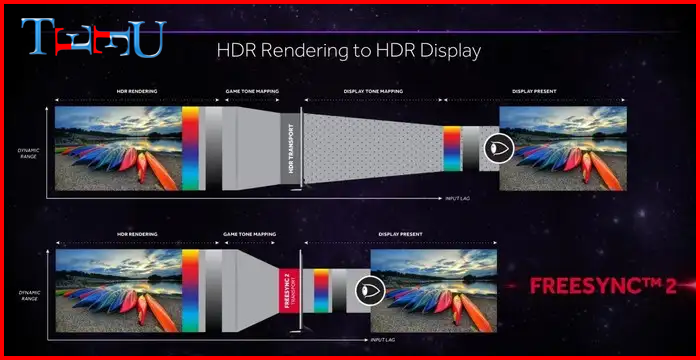

One of the advantages of DP is native support for variable refresh rate (VRR). This technology has been part of the DP standard since DP1.2a, and it is the basis for the large-scale adoption of FreeSync (and G-Sync Compatible) technology in recent years. On most monitors, using this technology still requires the DP interface.

In addition, thanks to its locking latch, a DP connector and cable stay in place more securely than HDMI. The risk of the cable coming loose when accidentally pulled, as can happen with HDMI, is almost non-existent.

Moreover, DP can connect multiple screens to a single port through Multi-Stream Transport (MST). Some monitors also support DP daisy-chaining based on this technology (monitors are connected directly to one another through their DP interfaces), which makes DP even better in terms of scalability.

HDMI is a licensed standard that requires certification, while DP is an open standard. This is an important reason why many innovations in display technology (such as DSC, G-Sync and FreeSync) appear first on DP and only later, slowly, on HDMI.

The DP cable also has one key parameter that limits its use scenarios: under current standards, its maximum length is limited to less than 3m. This makes it unlikely to be used in home theaters, long-distance signal transmission and similar scenarios. In 99% of cases, it can only be used between a desktop host and a monitor, which indirectly limits the adoption of this interface on devices such as TVs and projectors.

In terms of the most important factor, transmission bandwidth, DP's latecomer advantage has meant that HDMI has always been slightly behind the DP version of the same period. HDMI2.1 reaching products ahead of DP2.0 can be regarded as a win this time. Although HDMI has long lagged behind on paper, when not running at extremely high resolution and refresh rate there is actually no perceptible difference between the currently most mainstream DP1.4 and HDMI2.0.

Since the release of HDMI 2.0 in 2013, the HDMI interface has been able to transmit 4K@60 Hz in 8-bit color, and to reach a maximum of 8K@30Hz in 4:2:0 YCbCr output mode, although edges will look blurry in that mode.

As for the missing variable refresh rate technology, HDMI has supported FreeSync through an AMD extension since HDMI 2.0b, and variable refresh rate has been included in the standard itself in the HDMI 2.1 protocol.

So far, among graphics cards, only NVIDIA's latest RTX30 series is equipped with the HDMI2.1 interface (RTX20 series cards and some non-reference graphics cards are only equipped with HDMI2.0 interfaces), and most monitors are still equipped with HDMI2.0 interfaces. Therefore, taking overall functionality into account, the best way to connect a monitor to a desktop computer is still DP.

Although it has long been behind in theoretical performance, the HDMI specification enjoys extremely high popularity and compatibility. As early as 2004, when the standard was still young, millions of devices with HDMI had already shipped, and by 2020 devices with HDMI can be seen everywhere, with virtually all mainstream devices equipped with this interface.

Although few graphics cards support the full HDMI2.1 specification, a large number of HDMI 2.1 devices have already launched among consumer-grade equipment such as TVs, Blu-ray players, and home theaters. In the short term, HDMI's popularity and versatility will remain stronger than DP's.

The biggest practical advantage of HDMI over DP is that the cable length can be up to 15m, five times that of DP cables. This matters little for players who connect a desktop computer to a monitor, but it is very important for scenarios such as home theaters that require long-distance connections, and it gives this cable a wider range of application scenarios.

DP vs. HDMI: How do gamers choose?

We have introduced the technical parameters of DisplayPort and HDMI, and you should now have a good understanding of these two transmission protocols. So, back to the original question: which connection method is actually more suitable for gamers?

Some of this depends on the hardware you already own or plan to purchase. Both standards can provide an excellent gaming experience, but if you want the best possible one, the ranking among products currently on the market is HDMI 2.1>DP 1.4>DP 1.3>HDMI 2.0. Among the protocols released so far, DP 2.0 should have the best theoretical performance, but how widely it will be adopted is still unclear.

In some high-resolution, high-refresh-rate application scenarios, gamers need to choose according to their own platform.

For gamers using NVIDIA cards, the best solution currently is to use the DP 1.4 interface to connect to a monitor equipped with G-Sync. The new HDMI is only practical when connecting to a TV, because the only displays currently compatible with G-Sync over HDMI 2.1 are TVs. On ordinary monitors, only DP can fully exploit the features of an NVIDIA card.

The choice for gamers using AMD cards is more relaxed, because FreeSync can currently be enabled over the HDMI interface on monitors that support it, so there is not much difference in functionality and practicality between DP 1.4 and HDMI 2.0 with an AMD card. However, DP will still be the preferred standard for PC monitors, because many HDMI FreeSync monitors can only operate at lower resolutions or refresh rates, and there are fewer products above 144Hz.

If you already own a monitor with a refresh rate below 144Hz that does not support G-Sync or FreeSync, the monitor supports both HDMI and DP inputs, and the graphics card is also equipped with both interfaces, the choice of connection method becomes less important.

At a resolution of 2560 * 1440 (1440P) @144 Hz with 8bit color depth, DP1.2 or later and HDMI 2.0 or later can both operate normally, and any display mode below this will also work on either connection. There is indeed no practical difference between the two interfaces here (in theory, the signals they can transmit are the same).

When using a desktop computer with a monitor, the DP interface is clearly the best choice, as it can make full use of the performance of the output end. For this reason, current graphics cards are equipped with more DP interfaces. Although the new RTX 30 series graphics cards support the HDMI 2.1 interface, they generally carry only two or fewer of these ports.

In scenarios where the host is connected to large screens such as TVs and projectors, HDMI will remain the best choice for a long time because it has a longer transmission distance and more convenient wiring. Most importantly, HDMI's device compatibility far exceeds that of the DP interface. Currently, devices with screens over 50 inches are rarely equipped with a DP interface.

Ultimately, although DP has advantages in its specification, HDMI's excellent compatibility and convenience give it a wider range of application scenarios. The two standards overlap in many fields and technologies.

VESA, the organization responsible for formulating the DisplayPort standard, mainly considers application scenarios in the PC field, while the consumer electronics consortium that formulates the HDMI protocol gives priority to consumer-grade devices such as TVs and projectors. Their different concerns ultimately lead the two protocols to serve different, segmented application scenarios.

If you want high resolution, wide color gamut and a full set of features, use DP. If you need a large screen and strong compatibility, use HDMI. Game enthusiasts can choose according to the usage scenario. But for more than 90% of ordinary users, the bottleneck affecting the display effect is more likely to be the graphics card and monitor, so don't agonize too much over the choice between the two.
